A new study models how rainy conditions affect LiDAR sensors

According to researchers, the LiDAR sensors on autonomous vehicles are less able to detect distant objects in heavy rain.

The Intelligent Vehicles Group at the University of Warwick published a study in the IEEE Sensors Journal detailing how the researchers simulated and evaluated the performance of LiDAR sensors in rain.

Original Equipment Manufacturers (OEMs) and technology firms claim that high-level autonomous vehicles (AVs) will improve road safety and also bring economic and social benefits. All high-level AVs rely heavily on sensors to function.

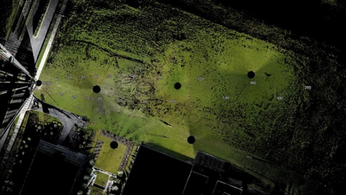

Researchers tested an autonomous vehicle's LiDAR sensors in various rain conditions while driving around a replica of real roads in Coventry in the WMG 3xD simulator. Because autonomous vehicles must be tested over millions of miles to demonstrate that they are safe, that testing is carried out safely and efficiently in a simulator.
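The article does not explain where the "millions of miles" figure comes from. One common back-of-the-envelope argument, which is not taken from the study, treats failures as a Poisson process with rate r per mile: if n miles are driven with zero failures, the rate can be claimed to be below r with confidence c when exp(-rn) <= 1 - c, that is, n >= -ln(1 - c) / r (about 3 / r at 95% confidence). The function name and the failure rate used below are hypothetical.

```python
import math


def failure_free_miles_needed(max_failure_rate_per_mile: float, confidence: float = 0.95) -> float:
    """Failure-free miles needed to show the failure rate is below the target.

    Assumes failures follow a Poisson process, so
    P(no failures in n miles) = exp(-r * n). Setting this to at most
    (1 - confidence) and solving for n gives the bound returned here.
    """
    return -math.log(1.0 - confidence) / max_failure_rate_per_mile


# Hypothetical target: at most one failure per million miles.
print(f"{failure_free_miles_needed(1e-6):,.0f} miles")
```

With this hypothetical target, roughly 3 million failure-free miles would be needed at 95% confidence, and the figure grows in inverse proportion to the target rate, which is why simulation is attractive.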

LiDAR sensors work by emitting numerous narrow beams of near-infrared light with circular or elliptical cross-sections. These beams reflect off objects in their paths and return to the sensor's detector.
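The article does not say how a returned beam is turned into a distance. Many automotive LiDARs use pulsed time of flight, so the minimal sketch below assumes a pulsed sensor (some designs use other ranging methods, such as FMCW). The function name is illustrative only.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_time_of_flight(round_trip_s: float) -> float:
    """Distance to the reflecting surface for a pulsed time-of-flight LiDAR.

    The light travels to the object and back, so the one-way range is
    half of the total path length.
    """
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


# A return arriving 200 ns after emission.
print(f"{range_from_time_of_flight(200e-9):.2f} m")
```

For example, a return arriving 200 ns after emission corresponds to an object roughly 30 m away. A raindrop that reflects enough light produces a return in exactly the same way, which is why the receiver cannot tell it apart from a real object on timing alone.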

If a LiDAR beam meets a raindrop close to the transmitter and the drop reflects enough of the beam back to the receiver, the receiver will register the raindrop as an object. Droplets can also absorb some of the emitted light, reducing the sensor's performance.
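The two effects described above can be sketched with textbook approximations. The code below is an illustration under stated assumptions, not the study's model: it assumes raindrops are scattered uniformly at random (a Poisson process), so the chance that a beam meets at least one drop within distance d is 1 - exp(-nAd), where n is the drop number density and A the effective cross-section; and it models loss along the path with Beer-Lambert attenuation applied on the way out and back. All function names and parameter values are hypothetical.

```python
import math


def drop_cross_section_m2(beam_radius_m: float, drop_radius_m: float) -> float:
    """Area in which a drop centre must lie to intersect the beam.

    Assumes an idealised cylindrical beam of constant radius.
    """
    return math.pi * (beam_radius_m + drop_radius_m) ** 2


def p_drop_within(distance_m: float, drops_per_m3: float, cross_section_m2: float) -> float:
    """Probability of at least one drop in the beam path up to distance_m.

    Assumes drops are placed uniformly at random (Poisson), so the expected
    number of drops in the swept volume is n * A * d. Meeting a drop does not
    by itself cause a false detection: the drop must also reflect enough light
    to exceed the receiver's threshold.
    """
    expected_drops = drops_per_m3 * cross_section_m2 * distance_m
    return 1.0 - math.exp(-expected_drops)


def two_way_transmission(range_m: float, extinction_per_m: float) -> float:
    """Fraction of power surviving the trip to a target and back (Beer-Lambert)."""
    return math.exp(-2.0 * extinction_per_m * range_m)


# Hypothetical values, for illustration only.
area = drop_cross_section_m2(beam_radius_m=0.005, drop_radius_m=0.001)
print(f"P(drop within 5 m): {p_drop_within(5.0, 1000.0, area):.3f}")
print(f"Power surviving to 100 m and back: {two_way_transmission(100.0, 0.002):.3f}")
```

Both quantities grow worse as rain gets heavier (more drops, higher extinction), which matches the qualitative trend the study reports; the real model also has to account for beam divergence and drop size.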

The team applied probabilistic rain models at a range of intensities, from no rain up to heavy rain, to simulate rain in the WMG 3xD simulator. After measuring the responses of the LiDAR sensors, the researchers recorded both false positive and false negative detections.
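The article does not say which drop-size distribution the probabilistic rain model uses. As an illustration only, the sketch below assumes the classic Marshall-Palmer distribution, N(D) = N0 exp(-Λ D) with N0 = 8000 m^-3 mm^-1 and Λ = 4.1 R^-0.21 mm^-1 for rain rate R in mm/h. It ties both the number and the size of drops to the rain rate, so "no rain" and heavier intensities map to different drop populations. The function names are hypothetical.

```python
import random
from typing import List

N0_PER_M3_PER_MM = 8000.0  # Marshall-Palmer intercept parameter (assumed distribution)


def mp_slope_per_mm(rain_rate_mm_h: float) -> float:
    """Marshall-Palmer slope parameter Lambda for a rain rate R > 0 in mm/h."""
    return 4.1 * rain_rate_mm_h ** -0.21


def drops_per_m3(rain_rate_mm_h: float) -> float:
    """Total drop number density, N0 / Lambda; zero when there is no rain."""
    if rain_rate_mm_h <= 0.0:
        return 0.0
    return N0_PER_M3_PER_MM / mp_slope_per_mm(rain_rate_mm_h)


def sample_diameters_mm(rain_rate_mm_h: float, count: int, rng: random.Random) -> List[float]:
    """Draw drop diameters (mm) from the exponential Marshall-Palmer shape."""
    if rain_rate_mm_h <= 0.0:
        return []
    lam = mp_slope_per_mm(rain_rate_mm_h)
    return [rng.expovariate(lam) for _ in range(count)]


rng = random.Random(42)
for rate in (0.0, 5.0, 50.0):
    sizes = sample_diameters_mm(rate, 5, rng)
    print(rate, round(drops_per_m3(rate)), [round(d, 2) for d in sizes])
```

Heavier rain lowers Λ, which gives both more drops per cubic metre and a larger mean diameter (1 / Λ). The exponential form has no upper limit on drop size, so practical simulators often truncate it.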

The results show that the sensors found it harder to detect objects as rain intensity increased. At close range (up to 50 m from the car), a number of raindrops were falsely reported as objects. These false detections became less frequent in the medium range (50 m to 100 m), but as rainfall increased to as much as 50 mm per hour, the sensors' ability to detect objects declined with distance.
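The bookkeeping behind these results can be sketched as follows, assuming the simulator labels each return with its true source. A return produced by rain counts as a false positive, a ground-truth target with no return counts as a false negative, and false positives are then grouped into the close and medium distance bands quoted above. The `Return` class and both function names are hypothetical, not taken from the study.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional, Tuple


@dataclass
class Return:
    """One LiDAR return with a hypothetical ground-truth label from the simulator."""
    range_m: float
    source: str  # "rain" or "object"
    target_id: Optional[int] = None  # set only when source == "object"


def count_errors(returns: List[Return], target_ids: Iterable[int]) -> Tuple[int, int]:
    """Return (false_positives, false_negatives) for one scan."""
    # Any return caused by a raindrop is a spurious detection.
    false_positives = sum(1 for r in returns if r.source == "rain")
    # A ground-truth target is missed if no return was attributed to it.
    detected = {r.target_id for r in returns if r.source == "object"}
    false_negatives = len(set(target_ids) - detected)
    return false_positives, false_negatives


def bin_by_range(ranges_m: Iterable[float], close_limit_m: float = 50.0,
                 medium_limit_m: float = 100.0) -> Dict[str, int]:
    """Count detections in the close, medium and far distance bands."""
    counts = {"close": 0, "medium": 0, "far": 0}
    for r in ranges_m:
        if r <= close_limit_m:
            counts["close"] += 1
        elif r <= medium_limit_m:
            counts["medium"] += 1
        else:
            counts["far"] += 1
    return counts


scan = [Return(12.0, "rain"), Return(30.0, "object", target_id=1), Return(72.0, "rain")]
print(count_errors(scan, target_ids=[1, 2]))  # (2, 1)
print(bin_by_range(r.range_m for r in scan if r.source == "rain"))
# {'close': 1, 'medium': 1, 'far': 0}
```

Accumulating these counts over many scans at each rain intensity gives the kind of range-dependent comparison the study describes.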

According to Warwick’s Dr Valentina Donzella, “the developed real-time sensor and noise models will help to further investigate these aspects, and may also inform autonomous vehicle manufacturers’ design choices, as more than one type of sensor will be needed to ensure the vehicle can detect objects in heavy rain.”

Dr Donzella added that further research is needed into how to ensure LiDAR sensors can still detect objects reliably in noisy environments.

Abstract

The global Connected and Autonomous Mobility industry is growing at a rapid pace.

To ensure the successful adoption of connected automated mobility solutions, their safety, reliability and hence public acceptance are paramount.

It is widely known that, in order to demonstrate that L3+ automated systems are safer than human drivers, several million miles or more need to be driven.

The only way to carry out this amount of testing efficiently and in a timely manner is to use simulations and high-fidelity virtual environments. Two key components for testing an automated system in a synthetic environment are validated sensor models and noise models for each sensor technology.

Sensors are the element that feeds information into the system, enabling it to plan its trajectory and navigate safely. In this paper, we propose an innovative real-time LiDAR sensor model based on beam propagation and a probabilistic rain model that takes into account raindrop distribution and size.

The model can seamlessly run in real-time, synchronised with the visual rendering, in immersive driving simulators, such as the WMG 3xD simulator.

The models are developed using Unreal Engine, demonstrating that gaming technology can be merged with the automated vehicle (AV) simulation toolchain for the creation and visualization of high-fidelity scenarios and for accurate AV testing.

This work can be extended to add more sensors and more noise factors or cyberattacks in real-time simulations.
